Faces DB

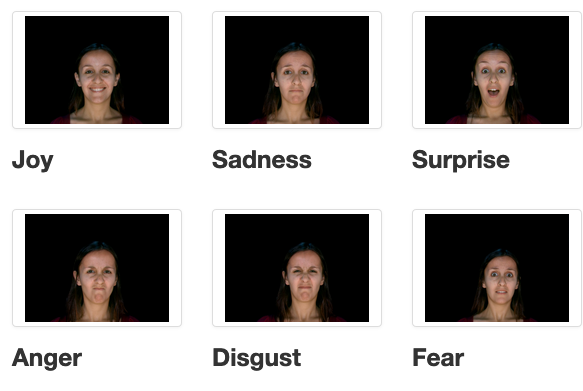

The IMPA-FACE3D database was created in 2008 to support research on facial animation, in particular the analysis and synthesis of faces and expressions. For this purpose, we take as a basis the neutral face and the six universal expressions. The main feature of this dataset is that it records geometric information together with color.

Expressive Talking Head

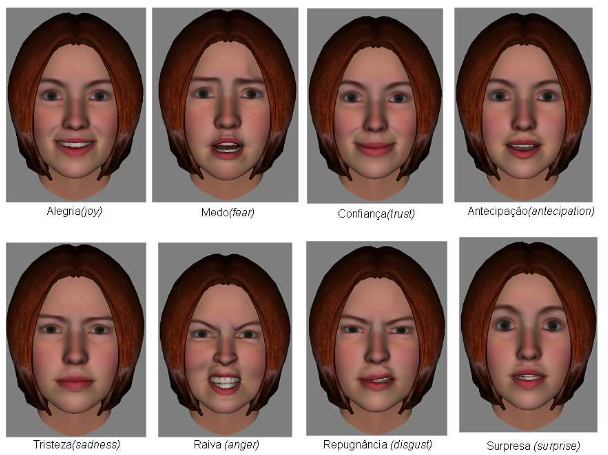

This project focuses on facial animation in which speech and expressions are synchronized. Its outcome was the implementation of a new talking head system.

Paper: Expressive Talking Heads: A Study on Speech and Facial Expression in Virtual Characters

Eye Motions in Virtual Agents

This project developed a model for producing eye movements in synthetic agents. The main goal was to improve the realism of the facial animation of such agents during conversational interactions.

Faces with Emotion

This project implemented a system for generating dynamic facial expressions synchronized with speech, rendered on a realistic three-dimensional face. It takes into account the speech and emotional features of a virtual character's performance.

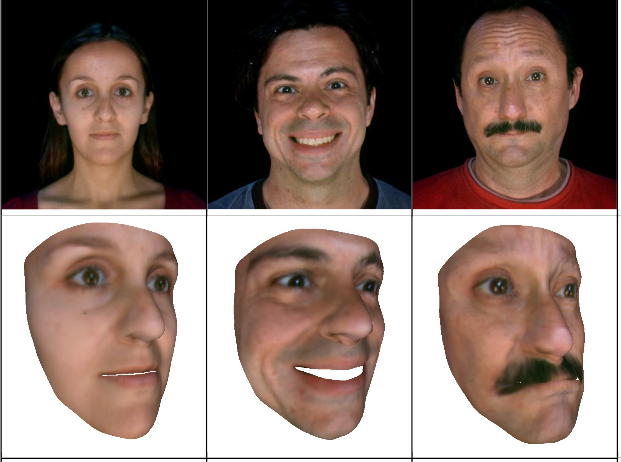

3D Face Reconstruction

This project developed a new method of 3D face computational photography based on a training dataset of facial expressions composed of both facial range images (3D geometry) and facial texture (2D photo).

Thesis: 3D human face reconstruction using principal components spaces
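
To make the idea of a principal components space concrete, the sketch below fits a generic PCA model to a set of face vectors and reconstructs one face from its coefficients. It is only an illustration under stated assumptions, not the method of the thesis: the data is synthetic, each face is assumed to be a flat vector (for example, geometry and texture values concatenated), and the names `fit_pca`, `project`, and `reconstruct` are hypothetical.

```python
import numpy as np

def fit_pca(samples, n_components):
    """Return the mean and the top principal directions of row-vector samples."""
    mean = samples.mean(axis=0)
    # SVD of the centered data; the rows of vt are orthonormal principal directions.
    _, _, vt = np.linalg.svd(samples - mean, full_matrices=False)
    return mean, vt[:n_components]

def project(face, mean, components):
    """Coefficients of a face in the principal components space."""
    return components @ (face - mean)

def reconstruct(coeffs, mean, components):
    """Rebuild a face vector from its coefficients."""
    return mean + components.T @ coeffs

rng = np.random.default_rng(0)
# Synthetic stand-in for a face dataset (assumption): 20 "faces" of 30 values
# each, generated so that they lie in a 5-dimensional affine subspace.
basis = rng.normal(size=(5, 30))
faces = rng.normal(size=(20, 5)) @ basis + 3.0

mean, components = fit_pca(faces, n_components=5)
coeffs = project(faces[0], mean, components)
approx = reconstruct(coeffs, mean, components)
error = float(np.linalg.norm(approx - faces[0]))
```

Because the synthetic faces span only five dimensions, five components are enough to reconstruct them up to floating-point error. With real scans the number of components would be a trade-off between compactness and fidelity.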

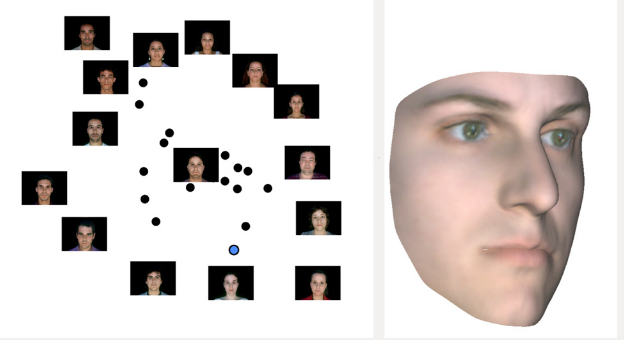

An Interface for Face Generation

The project uses a dataset of 3D human faces together with a procedure for interactively reconstructing and generating new 3D faces. This approach creates face models from structured data, a task that would typically require the manipulation of hundreds of parameters.

Paper: Facing the High-dimensions: Inverse Projection with Radial Basis Functions
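
As a rough illustration of inverse projection with radial basis functions, the sketch below learns a map from low-dimensional positions back to high-dimensional vectors by RBF interpolation. It is a minimal sketch under assumptions, not the implementation from the paper: the 2D layout, the Gaussian kernel, the shape parameter `eps`, the synthetic data, and the function names are all chosen here for illustration.

```python
import numpy as np

def gaussian_kernel(a, b, eps):
    """Matrix of exp(-eps * ||a_i - b_j||^2) for all pairs of rows."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-eps * d2)

def fit_inverse_projection(points_2d, points_nd, eps=1.0):
    """Solve Phi @ W = X so that the map interpolates the known samples."""
    phi = gaussian_kernel(points_2d, points_2d, eps)
    return np.linalg.solve(phi, points_nd)

def inverse_project(query_2d, points_2d, weights, eps=1.0):
    """Map one or more 2D positions back to high-dimensional vectors."""
    return gaussian_kernel(np.atleast_2d(query_2d), points_2d, eps) @ weights

rng = np.random.default_rng(0)
# Assumed setup: 9 samples placed on a 3x3 grid in the 2D projection,
# each associated with a 12-dimensional parameter vector (synthetic).
grid = np.arange(3.0)
points_2d = np.array([[x, y] for x in grid for y in grid])
points_nd = rng.normal(size=(9, 12))

weights = fit_inverse_projection(points_2d, points_nd)
recovered = inverse_project(points_2d[4], points_2d, weights)
new_sample = inverse_project([0.5, 1.5], points_2d, weights)
```

Querying a position that coincides with a known sample returns that sample's vector, while a position between samples yields a new blended vector. This is how a single 2D interaction can stand in for manipulating many parameters at once.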